These outputs are actually disabled by default. On the I/O faceplate of the unit, we get three display outputs. We did not have the bridge for this one when we had multiple A40’s but the feature is present. On the top of the unit, we get something that is fairly interesting in that we get a NVLINK connector. NVIDIA A40 Ariflow Bracket Mounting And 8 Pin That section also includes holes for vendor-specific mounting support brackets. We also get a standard 8-pin data center GPU power connector on the rear of the unit. As some frame of reference, the GPU with its PCIe I/O plate is around 1kg/ 2.2lbs so the heatsink has significant heft. NVIDIA A40 PCIe ConnectorĬooling on the GPU is passive, but NVIDIA says that the card is able to handle airflow in either direction so air can be pushed or pulled through the heatsink. We still get a standard PCIe Gen4 x16 edge connector on the card. NVIDIA A40 Front ViewĪs we expect, this is a newer PCIe Gen4 generation GPU, so it is likely to be paired with 3rd Generation Intel Xeon Scalable “Ice Lake” or AMD EPYC 7002 “Rome” or EPYC 7003 “Milan” CPUs. This uses NVIDIA’s gold theme and looks similar to many of the other NVIDIA PCIe GPUs of this generation that we have used. The A40 card itself is a double-width full-height full-length PCIe Gen4 GPU. We have already seen the A40 in a number of STH system reviews, but today, we decided it was time to give the unit its own piece.

#Ultra fp64 professional

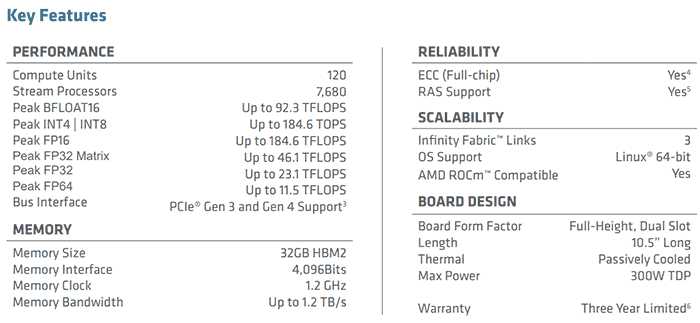

Furthermore, it has a 300W TDP making it a PCIe Gen4 GPU with a relatively high TDP that can be used in professional workstations and servers. The A40 is a 48GB GPU that is passively cooled, yet also has video outputs making it a very interesting card. Shader Model 5.1, OpenGL 4.5, DirectX 12.0, Vulkan 1.Today we are going to take a quick look at the NVIDIA A40 GPU.

#Ultra fp64 software

NVIDIA’s Pascal GPU technology enables the GP100 to run compute and graphics tasks simultaneously, allowing users to run simulations and create 3D models at the same time.

With 16GB of HBM2 memory, larger simulations can fit into GPU memory. Users can also connect two GP100 cards with NVLink to provide 32GB of HBM2 memory for the largest simulations.
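To make the 16GB versus 32GB figures concrete, here is a minimal Python sketch that estimates whether a dense simulation grid fits on one card or needs two. The function names, the 1024³ grid, and the three FP64 fields per cell are illustrative assumptions, not figures from NVIDIA. The sketch also treats each card's 16GB as 16 GiB, ignores driver and framework overhead, and assumes the application can actually address both cards' memory over NVLink.

```python
GIB = 1024 ** 3  # bytes per GiB; treating "16GB" as 16 GiB is an assumption


def grid_bytes(nx, ny, nz, fields=1, bytes_per_value=8):
    """Bytes needed for a dense 3D grid with `fields` values per cell (FP64 by default)."""
    return nx * ny * nz * fields * bytes_per_value


def fits(required_bytes, cards=1, gib_per_card=16):
    """True if the working set fits in the combined memory of `cards` GPUs."""
    return required_bytes <= cards * gib_per_card * GIB


# Hypothetical workload: three FP64 fields on a 1024 x 1024 x 1024 grid.
need = grid_bytes(1024, 1024, 1024, fields=3)

print(f"working set: {need / GIB:.0f} GiB")
print("fits on one GP100:", fits(need, cards=1))
print("fits on two GP100s over NVLink:", fits(need, cards=2))
```

In this hypothetical case the working set is 24 GiB, which is too large for a single 16GB card but fits within the combined 32GB of an NVLink-connected pair. Real workloads need extra headroom for temporary buffers and the graphics context.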
